Many solutions in the cloud involve running tasks that invoke services. In this environment, a service that is subjected to intermittent heavy loads can suffer performance or reliability issues.

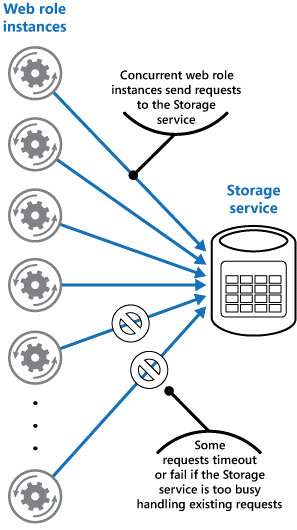

A service could be a component that is part of the same solution as the tasks that utilize it, or it could be a third-party service providing access to frequently used resources such as a cache or a storage service. If the same service is utilized by a number of tasks running concurrently, it can be difficult to predict the volume of requests to which the service might be subjected at any given point in time.

A service might experience peaks in demand that overload it, leaving it unable to respond to requests in a timely manner. Flooding a service with a large number of concurrent requests can also cause it to fail if it cannot handle the contention that these requests create.

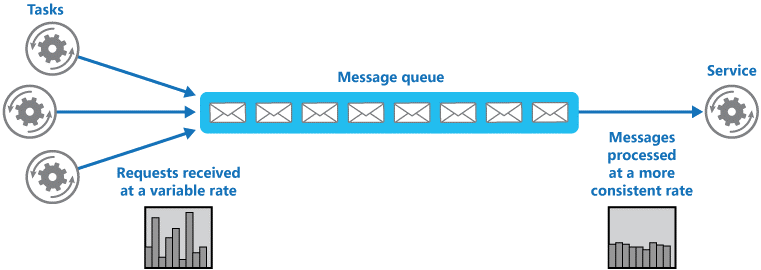

Refactor the solution and introduce a queue between the task and the service. The task and the service run asynchronously. The task posts a message containing the data required by the service to a queue. The queue acts as a buffer, storing the message until it is retrieved by the service. The service retrieves the messages from the queue and processes them. Requests from a number of tasks, which can be generated at a highly variable rate, can be passed to the service through the same message queue. The accompanying figure shows this structure.

Figure: Using a queue to level the load on a service

Figure: Concurrent requests to a data store without a queue implementation

The queue effectively decouples the tasks from the service, and the service can handle the messages at its own pace irrespective of the volume of requests from concurrent tasks. Additionally, there is no delay to a task if the service is not available at the time it posts a message to the queue.
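The decoupling described above can be illustrated with a minimal sketch. It assumes an in-process `queue.Queue` as a stand-in for a real message queue service, and the names `run_load_leveling`, `task`, and `service` are hypothetical, chosen only for this illustration. Several tasks post messages in a burst while a single service worker drains the queue at a fixed pace.

```python
import queue
import threading
import time


def run_load_leveling(num_tasks=4, messages_per_task=5, service_delay=0.01):
    """Tasks post to a shared queue in a burst; one service drains it at its own pace.

    Illustrative only: an in-memory queue stands in for a durable message queue.
    """
    work_queue = queue.Queue()   # buffer between the tasks and the service
    processed = []               # messages the service has finished handling
    post_durations = []          # time each task spent posting a message

    def task(task_id):
        for n in range(messages_per_task):
            start = time.perf_counter()
            # put() returns as soon as the message is buffered;
            # the task never waits for the service to process it.
            work_queue.put((task_id, n))
            post_durations.append(time.perf_counter() - start)

    def service():
        while True:
            message = work_queue.get()
            if message is None:          # sentinel: no more work will arrive
                break
            time.sleep(service_delay)    # simulated fixed processing cost
            processed.append(message)

    worker = threading.Thread(target=service)
    worker.start()

    producers = [threading.Thread(target=task, args=(i,)) for i in range(num_tasks)]
    for p in producers:
        p.start()
    for p in producers:
        p.join()

    work_queue.put(None)   # tell the service to stop once the backlog is drained
    worker.join()
    return processed, post_durations


if __name__ == "__main__":
    processed, post_durations = run_load_leveling()
    print(f"processed {len(processed)} messages")
    print(f"slowest post took {max(post_durations):.6f} s")
```

In this sketch the tasks finish posting almost immediately, while the service works through the backlog one message at a time. A production system would typically use a durable queue so that messages survive a restart of either side.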

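The main benefits of this pattern follow from that decoupling: the service is shielded from bursts in demand and can process requests at a sustainable rate, and tasks are not delayed when the service is slow or temporarily unavailable.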

This pattern is ideally suited to any type of application that uses services that may be subject to overloading.

This pattern might not be suitable if the application expects a response from the service with minimal latency.
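To see why latency can be a concern, a rough back-of-the-envelope estimate helps. The sketch below is a simplification: it assumes the queue is empty when a burst starts and that both the arrival rate and the service rate are constant. The function name `worst_case_wait_seconds` and its parameters are hypothetical.

```python
def worst_case_wait_seconds(burst_rate, service_rate, burst_seconds):
    """Approximate wait for the last message posted during a burst.

    burst_rate    -- messages posted per second during the burst
    service_rate  -- messages the service processes per second
    burst_seconds -- how long the burst lasts

    Assumes an empty queue at the start of the burst and constant rates.
    """
    # Messages that accumulate because arrivals outpace processing.
    backlog = max(0.0, (burst_rate - service_rate) * burst_seconds)
    # The last message waits until everything ahead of it is processed.
    return backlog / service_rate


if __name__ == "__main__":
    # 100 messages/s arriving for 30 s against a service handling 20 messages/s
    print(worst_case_wait_seconds(100, 20, 30))  # prints 120.0
```

Under these assumptions, a 30-second burst leaves the final message waiting about two minutes, which is acceptable for background work but not for a caller that needs an immediate response.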