See Competing Consumers Pattern-RabbitMQ for a code implementation of this pattern, in which priority is a flag on each message that decides which queue the message goes to.

Applications may delegate specific tasks to other services; for example, to perform background processing or to integrate with other applications or services. In the cloud, a message queue is typically used to delegate tasks to background processing. In many cases the order in which requests are received by a service is not important. However, in some cases it may be necessary to prioritize specific requests. These requests should be processed earlier than others of a lower priority that may have been sent previously by the application.

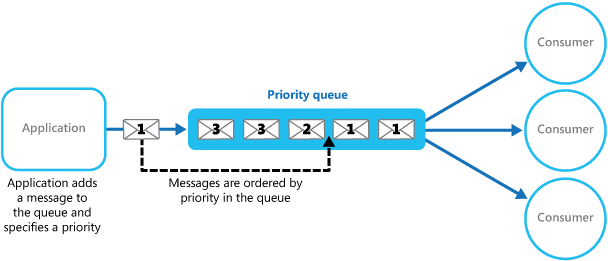

A queue is usually a first-in, first-out (FIFO) structure, and consumers typically receive messages in the same order that they were posted to the queue. However, some message queues support priority messaging: the application posting a message can assign it a priority, and the messages in the queue are automatically reordered so that messages with a higher priority are received before those with a lower priority. The figure below illustrates a queue that provides priority messaging.

Using a queuing mechanism that supports message prioritization
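The reordering behavior described above can be sketched with an in-memory heap. This is only an illustration, not the API of any particular broker: the class `PriorityMessageQueue`, its `post` and `receive` methods, and the numeric priority scale are all hypothetical names chosen for the example.

```python
import heapq
import itertools


class PriorityMessageQueue:
    """In-memory stand-in for a broker that supports message priorities."""

    def __init__(self):
        self._heap = []
        # Monotonic counter used as a tie-breaker so that messages with
        # the same priority are still received in FIFO order.
        self._sequence = itertools.count()

    def post(self, body, priority):
        # heapq is a min-heap, so negate the priority to pop the highest first.
        heapq.heappush(self._heap, (-priority, next(self._sequence), body))

    def receive(self):
        """Return the next message body, or None if the queue is empty."""
        if not self._heap:
            return None
        _, _, body = heapq.heappop(self._heap)
        return body


queue = PriorityMessageQueue()
queue.post("nightly report", priority=1)
queue.post("password reset", priority=9)
queue.post("weekly digest", priority=1)

received = [queue.receive() for _ in range(3)]
print(received)  # ['password reset', 'nightly report', 'weekly digest']
```

The high priority message jumps ahead of the two that were posted before and after it, while the two low priority messages keep their original relative order.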

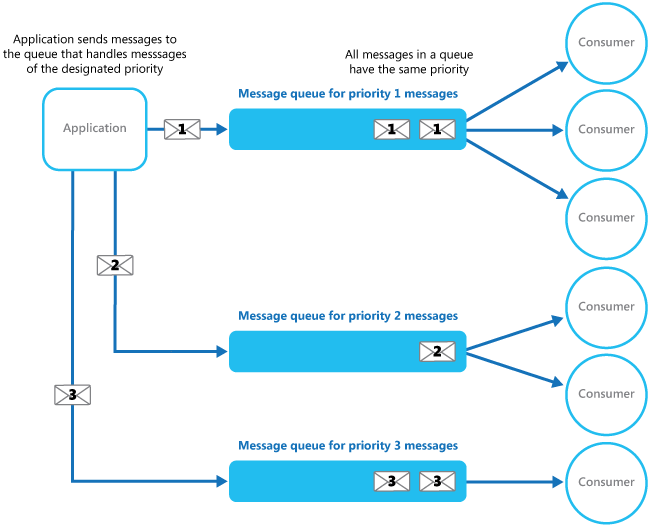

In systems that do not support priority-based message queues, an alternative solution is to maintain a separate queue for each priority. The application is responsible for posting messages to the appropriate queue. Each queue can have a separate pool of consumers. Higher priority queues can have a larger pool of consumers running on faster hardware than lower priority queues. The figure below shows this approach.

Using separate message queues for each priority
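A minimal sketch of the separate-queue approach, using the standard library's thread-safe `queue.Queue` in place of a real message broker. The priority names `"high"` and `"low"`, the pool sizes, and the helper functions `post`, `worker`, and `run_pools` are assumptions made for illustration only.

```python
import queue
import threading

# Hypothetical priority levels and pool sizes, chosen only for illustration.
POOL_SIZES = {"high": 3, "low": 1}
queues = {name: queue.Queue() for name in POOL_SIZES}
processed = []
processed_lock = threading.Lock()


def post(body, priority):
    """The sender picks the queue; the queues themselves are plain FIFO."""
    queues[priority].put(body)


def worker(priority):
    """Consume from one queue only, until a None sentinel arrives."""
    source = queues[priority]
    while True:
        body = source.get()
        if body is None:
            break
        with processed_lock:
            processed.append((priority, body))


def run_pools():
    """Start one consumer pool per queue, then shut the pools down."""
    threads = [
        threading.Thread(target=worker, args=(priority,))
        for priority, size in POOL_SIZES.items()
        for _ in range(size)
    ]
    for thread in threads:
        thread.start()
    # One sentinel per worker so that every thread shuts down cleanly.
    for priority, size in POOL_SIZES.items():
        for _ in range(size):
            queues[priority].put(None)
    for thread in threads:
        thread.join()


post("password reset", "high")
post("nightly report", "low")
post("payment confirmation", "high")
run_pools()
print(sorted(processed))
```

Because each pool reads only from its own queue, the single low priority consumer is never blocked by high priority traffic; it simply has less capacity than the high priority pool.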

A variation on this strategy is to have a single pool of consumers that check for messages on high priority queues first, and then only start to fetch messages from lower priority queues if no higher priority messages are waiting. There are some semantic differences between a solution that uses a single pool of consumer processes (either with a single queue that supports messages with different priorities or with multiple queues that each handle messages of a single priority), and a solution that uses multiple queues with a separate pool for each queue.

In the single pool approach, higher priority messages will always be received and processed before lower priority messages. In theory, messages that have a very low priority may be continually superseded and might never be processed. In the multiple pool approach, lower priority messages will always be processed, just not as quickly as those of a higher priority (depending on the relative size of the pools and the resources that they have available).
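The single pool behavior over multiple queues can be sketched as a consumer that always scans the queues from highest to lowest priority. The queue names and the `receive_next` helper are hypothetical, and plain in-memory deques stand in for real queues.

```python
from collections import deque

# Hypothetical queue names, listed from highest to lowest priority.
PRIORITY_ORDER = ["high", "medium", "low"]
queues = {name: deque() for name in PRIORITY_ORDER}


def receive_next():
    """Return (priority, body) from the highest-priority non-empty queue."""
    for name in PRIORITY_ORDER:
        if queues[name]:
            return name, queues[name].popleft()
    return None  # every queue is empty


queues["low"].append("weekly digest")
queues["high"].append("password reset")
queues["medium"].append("invoice email")
queues["high"].append("payment confirmation")

order = []
while (message := receive_next()) is not None:
    order.append(message)
print(order)
```

The low priority message was posted first but is received last. If high priority messages kept arriving faster than the pool could drain them, `receive_next` would never reach the low priority queue, which is the starvation risk described above.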

Using a priority queuing mechanism can provide the following advantages:

This pattern is ideally suited to scenarios where: